Source: VICE

Summary:

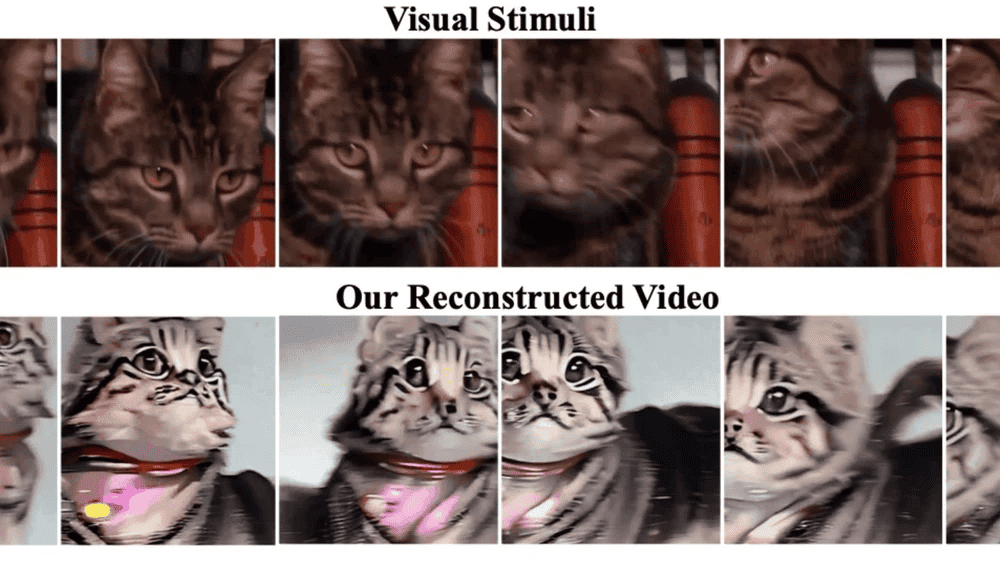

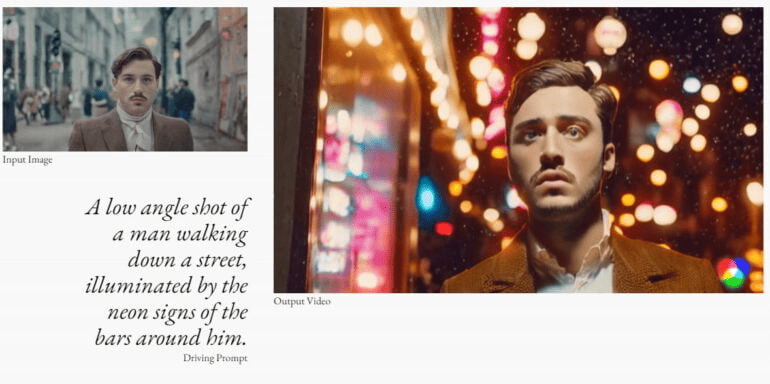

In a significant advance for generative AI, researchers from the National University of Singapore and The Chinese University of Hong Kong have developed MinD-Video, a model that reconstructs high-quality videos from brain activity. Using fMRI recordings and the text-to-image AI model Stable Diffusion, the team generated videos that closely resembled the ones the test subjects had watched. The system's "two-module pipeline" consists of a trained fMRI encoder and a refined version of Stable Diffusion. The team reported an accuracy of 85 percent for the reconstructed videos, surpassing previous methods. The research could have applications in neuroscience and brain-computer interfaces.

The study also reported three notable findings, shedding light on the role of the visual cortex, the hierarchical way the fMRI encoder operates, and the encoder's ability to capture more detailed information as training continues.
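To make the "two-module pipeline" idea concrete, here is a toy sketch of the data flow only: brain readings go into an encoder, and the encoder's output conditions a video generator. Everything in it is a hypothetical stand-in, not MinD-Video's actual code. `ToyFMRIEncoder` is assumed to be a fixed linear map from voxel values to an embedding (the real encoder is a trained neural network), and `ToyVideoGenerator` simply scales the embedding into frames (the real second module is a refined Stable Diffusion model that runs iterative denoising).

```python
from dataclasses import dataclass
from typing import List

Vector = List[float]


@dataclass
class ToyFMRIEncoder:
    """Hypothetical stand-in for module 1 (the trained fMRI encoder):
    a fixed linear map from voxel readings to a small embedding."""
    weights: List[Vector]  # one row per embedding dimension, one column per voxel

    def encode(self, voxels: Vector) -> Vector:
        # One dot product per embedding dimension.
        return [sum(w * v for w, v in zip(row, voxels)) for row in self.weights]


@dataclass
class ToyVideoGenerator:
    """Hypothetical stand-in for module 2 (the conditioned diffusion model).
    It only turns the embedding into a sequence of 'frames' so the flow is visible."""
    num_frames: int

    def generate(self, embedding: Vector) -> List[Vector]:
        # Each frame is the embedding scaled by a per-frame factor; a real model
        # would instead denoise images step by step, conditioned on the embedding.
        return [
            [x * (t + 1) / self.num_frames for x in embedding]
            for t in range(self.num_frames)
        ]


def reconstruct(voxels: Vector, encoder: ToyFMRIEncoder,
                generator: ToyVideoGenerator) -> List[Vector]:
    """Run the two modules in sequence: brain activity -> embedding -> frames."""
    return generator.generate(encoder.encode(voxels))


if __name__ == "__main__":
    encoder = ToyFMRIEncoder(weights=[[1.0, 0.0, 0.5], [0.0, 2.0, -1.0]])
    generator = ToyVideoGenerator(num_frames=3)
    for frame in reconstruct([0.2, 0.4, 0.6], encoder, generator):
        print(frame)
```

Keeping the two modules as separate objects mirrors the split described in the summary: the encoder can be trained or swapped independently of the generator, provided both agree on the embedding size.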